Overview

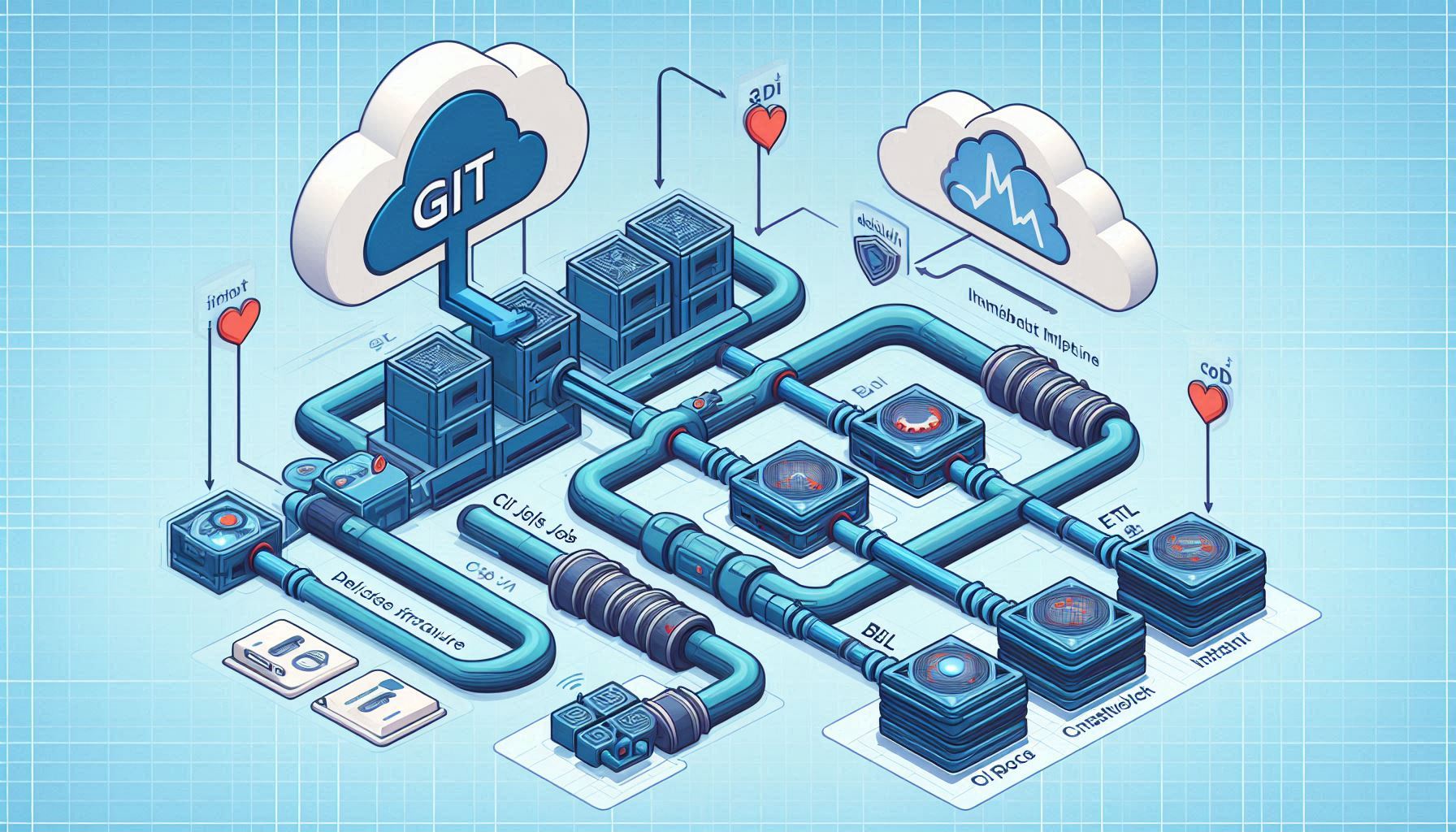

The client's data platform had grown over the years into a patchwork of scripts, ETL tools, and BI platforms. Releases were manual, downtime was frequent, and incident response lacked visibility.

Problem

- No consistent CI/CD pipeline for data and analytics workloads.

- Manual deployment steps prone to human error.

- Limited monitoring and alerting for failures.

- Infrastructure changes not versioned or reproducible.

Domain: Multi-system data platform

Scope: DevOps for ETL, data, and BI

Outcome: Automated, observable, stable workloads